commit 1ebf48704fd608324a690218f4d5a1b9e1054992

Author: C. Beau Hilton <cbeauhilton@gmail.com>

Date: Mon, 22 Jun 2020 09:15:31 -0500

migrating

Diffstat:

19 files changed, 1683 insertions(+), 0 deletions(-)

diff --git a/2018-09-01-on-making-this-website.md b/2018-09-01-on-making-this-website.md

@@ -0,0 +1,164 @@

+---

+layout: post

+title: "On the tools used to make this website"

+toc: false

+categories:

+ - technical

+tags:

+ - jekyll

+ - markdown

+ - atom

+ - hosting

+ - static web

+ - blog

+ - technical

+---

+

+I'm psyched about this little website. This post is about the tools and resources I used to make it.

+

+In summary, this is a _static website_ made with _Jekyll software_ using the code editor called _Atom_ to write posts in _Markdown language_. I also added a wishlist to the end, mostly to keep track of tools and tricks I may want to add.

+

+## Static Web

+

+[](https://blog.zipboard.co/how-to-start-with-static-sites-807b8ddfecc)

+

+Most of the internet we interact with on a day-to-day basis is "dynamic," meaning we request something from a web server (e.g. click on a Facebook post or search for something on Amazon), which then talks to a database to pull all the relevant information and fill in a template (e.g. post content + comments, or product page with images, ratings, ads, etc.). The "static" web is much more old school: ask for something and the server gives you something, no database and comparatively little processing. Whatever is on the server has to be pretty much prebuilt and ready to go. This process is much simpler, faster, and more secure. The basic protocols are old and well established, unlikely to change or break. For most online writing, such as a blog, static is the way to go, especially if it is likely to be consumed over a mobile internet connection.

+

+## Jekyll

+

+[](https://jekyllrb.com/)

+

+Jekyll is an open-source suite of open-source software for making static web pages.

+

+The basic idea is create a workflow that starts with just writing, followed by a predefined file structure and styling format to store all your stuff and make it pretty, and finally automatically translate it all into something a web browser can use. Jekyll also integrates nicely with GitHub, [which will host the site for free](https://help.github.com/articles/about-github-pages-and-jekyll/). GitHub also makes my favorite text editor, [Atom](https://atom.io/). This leads to an integrated workflow: write in Atom → build in Jekyll+GitHub → publish on GitHub.

+

+I found out about Jekyll from the [Rebecca Stone](https://ysbecca.github.io/programming/2018/05/22/py-wsi.html), who is writing the wonderful [py-wsi package](https://github.com/ysbecca/py-wsi). (This package makes massive digital pathology images tractable for deep learning.) As I read through her blog, I found [this post on Jekyll and why she switched from WordPress](https://ysbecca.github.io/programming/2017/04/29/jekyll-migration.html). I read a few more posts like hers and was converted.

+

+Some user-friendly Jekyll walkthroughs:

+

+- [Programming Historian: complete Jekyll and GitHub pages walkthrough for non-programmers](https://programminghistorian.org/en/lessons/building-static-sites-with-jekyll-github-pages)

+ - Assumes you know absolutely nothing, explains terminology and has copy-paste code plus instructions for Windows *and* Mac.

+- [WebJeda: YouTube videos](https://www.youtube.com/watch?v=bwThn0rxv7M)

+ - Modular and step-by-step. Sometimes it's nice to watch someone go through the process in real time.

+- [University of Idaho Library: workshop, with blog posts and video](https://evanwill.github.io/go-go-ghpages/0-prep.html)

+ - Comparable to Programming Historian, with the benefit of dual coverage in the blog and video. The video is from a workshop where they walked through the process in real time, from nothing to something in around an hour, and the students asked a lot of the same questions I had.

+

+### Jekyll Themes

+

+I stole the layout of this site from [vangeltzo.com](https://vangeltzo.com/index.html), whose beautiful design was Jekyll-ified (with permission!) by [TaylanTatli on GitHub](https://taylantatli.github.io/Halve/). I'm [not the only one](https://github.com/cbeauhilton/cbeauhilton.github.io/network/members) using this theme, [by](https://drivenbyentropy.github.io/) [any](https://ejieum.github.io/) [means](https://je553.github.io/).

+

+Jekyll themes are [abundant online](http://jekyllthemes.org/), generally [easy to fork](https://taylantatli.github.io/Halve/halve-theme/) ("fork" means "copy for your own use without affecting the original"), and often [well commented](https://taylantatli.github.io/Halve/posts) so they are straightforward to customize.

+

+### Why this theme?

+

+I had two basic requirements for my theme. First, it had to be [pretty](http://www.leonardkoren.com/lkwh.html). Second, I wanted a responsive split screen. "Responsive" means it can adapt itself to look nice on any device from a phone to a huge desktop monitor. The split screen confines the content to an easily readable width on the right and gives consistent navigation on the left. The 50/50 split screen is a little extreme, but I think I like it this way. If I ever want to change it to something like 66/33, it is [quite easy](https://github.com/TaylanTatli/Halve/issues/32).

+

+I'll say more about specific choices, such as color, logo, and tagline in another post.

+

+## Atom

+

+[](https://atom.io/)

+

+You can write all of your code entirely within GitHub, which has its own text editor. The above tutorials use this approach to make it easy to start. There's nothing wrong with that.

+

+A friend of mine, a [fellow CCLCM student](https://github.com/JaretK) who is a much more legit programmer than me, recommended Atom, and I fell in love with only minimal doses of digital [amortentia](http://harrypotter.wikia.com/wiki/Amortentia). It is ridiculously (almost dangerously) extensible, with community-supplied packages for an incredible breadth of programming languages and applications.

+

+For the nerds, for example, it has full support for LaTeX and all the typesetting, figure-making, citation-porn, and mathy goodness you can handle. One of my favorite workflows is to write something in Markdown to get the basics down with minimal fuss in an easier language, and then use Pandoc to convert it to LaTeX, followed by any futzing with the LaTeX (if you like) before outputting to PDF. You can also (!) combine the languages rather seamlessly.

+

+Here's a [post that details a full plain-text academic workflow with Atom](http://u.arizona.edu/~selisker/post/workflow/).

+

+For the poets, it can be a gorgeous and focused writing environment with infinitely more flexibility than Word. Here's [a post that describes how one creative writer uses Atom](https://8bitbuddhism.com/2017/12/29/a-novel-approach-to-writing-with-atom-and-markdown/). The [Zen package](https://atom.io/packages/Zen) alone should entice you Hemingway types, but also take a look at the writing assistance tools detailed in previous hyperlink or the hyperlink in the next paragraph.

+

+For me, the coolest thing is that I can have [one central hub](https://medium.com/@sroberts/how-i-atom-12988bce8fce) to do it all, with very little need for Word. If I use Atom to push things to GitHub, it also nearly replaces Google Docs for keeping everything safely in the cloud (caveat for the latter:as long as I don't mind making my work public).

+

+Note from 2019: I switched to [VS Code](https://code.visualstudio.com/). It's very similar to Atom, but faster.

+

+## Markdown

+[](https://daringfireball.net/projects/markdown/)

+

+Markdown is a "text-to-HTML conversion tool for web writers [that] allows you to write using an easy-to-read, easy-to-write plain text format, then convert it to structurally valid XHTML (or HTML)." (click image or [here](https://daringfireball.net/projects/markdown/) for source).

+

+It's inspired by the way people used to mark up their plain-text emails to make them more readable, with all the "#" and "-" and "***" you can handle. Therefore, if you look at the source of something written in Markdown, it's still pretty darn legible. Those of you who have read and written medical charts, many of these are still in common use (the "#" problem list, for example).

+

+Here are examples from a great [GitHub Markdown cheatsheet](https://github.com/adam-p/markdown-here/wiki/Markdown-Cheatsheet). First is the code and then the rendered text.

+

+`Emphasis, aka italics, with *asterisks* or _underscores_.`

+Emphasis, aka italics, with *asterisks* or _underscores_.

+

+`Strong emphasis, aka bold, with **asterisks** or __underscores__.`

+Strong emphasis, aka bold, with **asterisks** or __underscores__.

+

+`Combined emphasis with **asterisks and _underscores_**.`

+Combined emphasis with **asterisks and _underscores_**.

+

+```

+Section with a hashtag:

+# H1

+```

+

+Section with a hashtag:

+# H1

+

+

+```

+Section using underline style to accomplish the same thing:

+Alt-H1

+======

+```

+

+Section using underline style to accomplish the same thing:

+

+Alt-H1

+======

+

+

+Pretty cool, eh?

+

+## OK, so what do you _actually_ do?

+

+- Install all the stuff from one of the Jekyll how-tos mentioned above, follow one of the guides to set up GitHub pages, Travis CI, and your local jekyll environment.

+- Open a new `.md` file in your favorite text editor.

+- Insert something like this at the top:

+

+```

+---

+layout: post

+title: "On the tools used to make this website"

+toc: false #table of contents

+categories:

+ - technical

+tags:

+ - jekyll

+ - markdown

+ - atom

+ - hosting

+ - static web

+ - blog

+ - technical

+---

+

+```

+

+- Write all your stuff using Markdown syntax.

+- Commit your changes to GitHub

+- Wait for Travis CI to build, fix any errors (for example, you might need to delete the `BUNDLED WITH` lines in the gemfile.lock).

+- Check out your new post!

+

+## Wishlist/possibilities

+

+

+- Clickable Zen *reading* mode (remove all hyperlinks - whether or not hyperlinks impair focus is [hotly debated](https://books.google.com/books?id=QJxeBAAAQBAJ&pg=PA79&lpg=PA79&dq=On+Measuring+the+Impact+of+Hyperlinks+on+Reading&source=bl&ots=Ih_zN17-Nh&sig=F47u2HB7nBavnD3amydmJo5wNB4&hl=en&sa=X&ved=2ahUKEwjs0Pif1Z3dAhUKXa0KHcEeCucQ6AEwCXoECAEQAQ#v=onepage&q&f=false), he said with a meta-smirk).

+- Clickable dark/light theme switch, a la [dactl](https://melangue.github.io/dactl//) (that black and white water drop button on the top right) or [Hagura](https://blog.webjeda.com/dark-theme-switch/) (the text that reads "Dark/Light" at the bottom of the page is a button), for kinder day and night reading. The Hagura author wrote a tutorial [here](https://blog.webjeda.com/dark-theme-switch/).

+- ~~Side notes, a la Tufte. [Michael Nielsen's blog](http://augmentingcognition.com/ltm.html) has one implementation, and this [Tufte-ite Jekyll theme](http://clayh53.github.io/tufte-jekyll/articles/15/tufte-style-jekyll-blog) has another. I would probably have to reduce the page split from 50/50 to at least 66/33 for this to work properly.~~

+- ~~Or: [Barefoot footnotes](https://github.com/philgruneich/barefoot) (click and they show up, like Wikipedia)~~ Barefoot works very well, check out other posts.

+- Integrate fancy plugins

+

+ - ~~[Overview of how to integrate non GitHub-approved plugins, with nice script that automates the integration](https://drewsilcock.co.uk/custom-jekyll-plugins).~~ Went with Travis CI instead, using [these instructions](http://joshfrankel.me/blog/deploying-a-jekyll-blog-to-github-pages-with-custom-plugins-and-travisci/). I ended up breaking everything and fighting with it for a day, but it seems the whole issue was that the backend process of migrating from the github.io address to beauhilton.com was not instantaneous, and as soon as it was done I could change the internal reference to <https://beauhilton.com> and it came together. All that heroic hacking, for nothing...

+ - [Academicons](https://www.janknappe.com/blog/Integrating-Academicons-with-Fontawesome-in-the-Millennial-Jekyll-template/).

+ - [Jekyll Scholar](https://gist.github.com/roachhd/ed8da4786ba79dfc4d91) and [Jekyll Scholar Extras](https://github.com/jgoodall/jekyll-scholar-extras), see [example with clickable BibTex and PDF downloads](https://caesr.uwaterloo.ca//publications/index.html) in publications page that makes me salivate.

+ - [Integrate Jupyter Notebooks seamlessly](https://bethallchurch.github.io/jupyter-notebooks-with-jekyll/) (the way people usually do it, which is probably fine and doesn't require moar code, is to write up a plain-language explanation for the blog and link to the ipynb file on GitHub).

+ - [Add estimated reading time to pages](https://github.com/bdesham/reading_time).

+ - [Add search bar](http://www.jekyll-plugins.com/plugins/simple-jekyll-search).

+ - [Make the site pictures responsive](https://github.com/robwierzbowski/jekyll-picture-tag).

+ - [Add static comments to posts that would benefit from community](https://mademistakes.com/articles/jekyll-static-comments/#static-comments).

+ - ~~Get Table of Contents working for lengthy posts such as this one.~~ Added this feature, though not in this particular post because the "# H1" Markdown examples screw it up.

diff --git a/2018-09-07-on-the-slogan.md b/2018-09-07-on-the-slogan.md

@@ -0,0 +1,87 @@

+---

+layout: post

+title: "On the pretentious Latin slogan"

+categories:

+ - medicine

+tags:

+ - history

+ - balance

+ - humility

+ - medicine

+---

+

+# sola dosis facit venenum

+

+### Or: the dose makes the poison

+

+<p></p>

+___________

+<p></p>

+

+First, two quotes, one in English and one in German.

+

+Don't worry, we'll translate the German (this isn't one of those 19th century novels that expects fluency in English, French, Latin, Italian, and German just to get through a page), and the same basic idea is found in the English quote. In German one of the words has a delightful history that is exposed when juxtaposed with an English translation.

+

+> Poisons in small doses are the best medicines; and the best medicines in too large doses are poisonous.

+

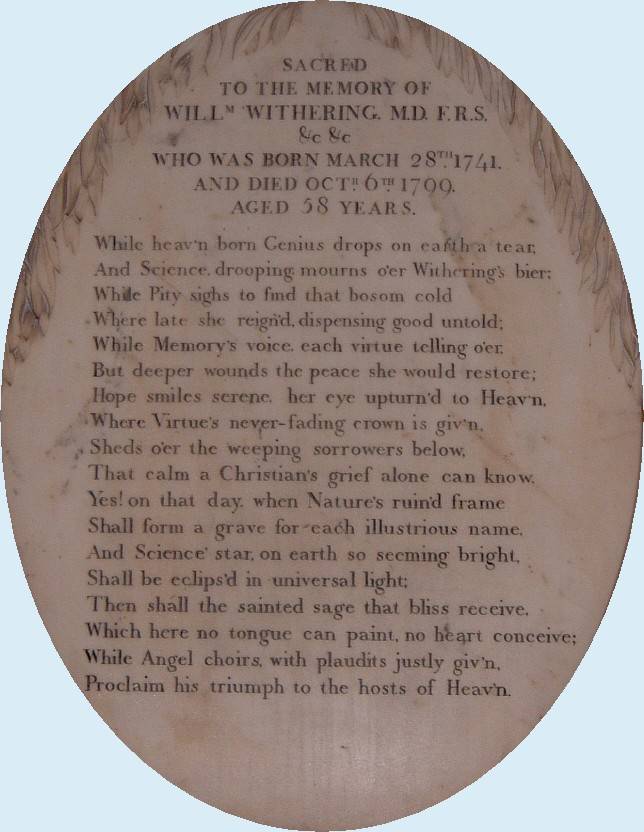

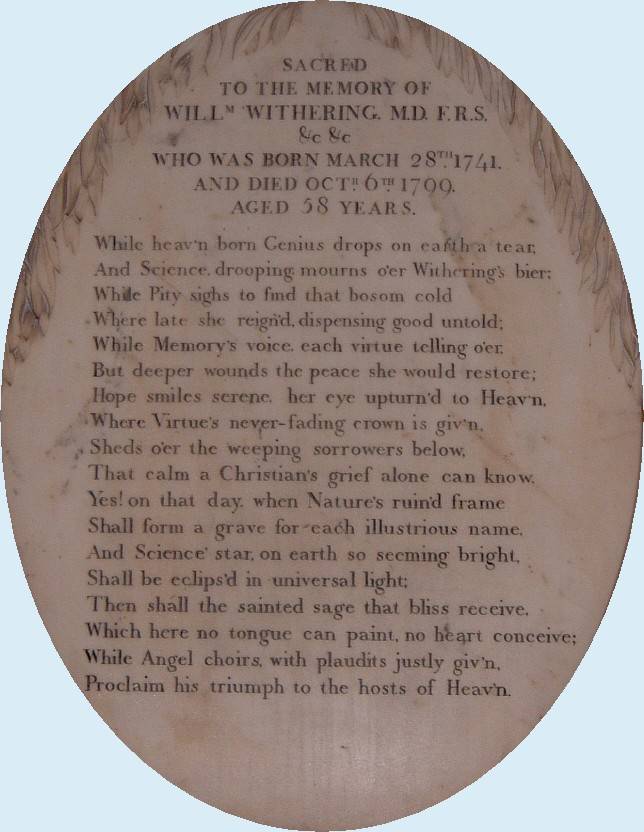

+> <cite><a href="http://theoncologist.alphamedpress.org/content/6/suppl_2/1.long">William Withering, 18th century English physician, discoverer of digitalis (sort of), and proponent of arsenic therapy.</a></cite> [^1] [^2]

+

+>Alle Dinge sind Gift, und nichts ist ohne Gift; allein die dosis machts, daß ein Ding kein Gift sei.

+

+> <cite><a href="http://www.zeno.org/Philosophie/M/Paracelsus/Septem+Defensiones/Die+dritte+Defension+wegen+des+Schreibens+der+neuen+Rezepte"> 1538, *Septem Defensiones*, by Swiss physician Philippus Aureolus Theophrastus Bombastus von Hohenheim, also known as Paracelsus, "Father of Toxicology."</a></cite>

+

+The phrase "sola dosis facit venenum," (usually rendered "the dose makes the poison" in English) is a Latinization of the German phrase above from Paracelsus. He wrote this in his "Seven Defenses" when he was fighting against accusations of poisoning his patients[^4] (malpractice court, it seems, is one of the oldest traditions in medicine).

+

+Here's my rough translation from Paracelsus' German:

+

+> All things are poison, and nothing is not a poison. Only the dose makes the thing not a poison.

+

+Throw in a couple of exclamation marks, italics, and fist pounds, and you have the makings of dialogue for the defendent in a 16th century *Law and Order*.

+

+### Paracelsus

+

+Why did this German guy have a Latin name?

+

+No one really knows. Von Hohenheim's relationship with Latin and the humanistic antiquity it represented was complicated. Despite writing books with names such as *Septem Defensiones*, he was known for refusing to lecture in Latin, preferring vernacular German instead, and the content of his books was invariably German.[^5] He publicly burned old Latin medical texts, along with their outdated ideas. My favorite version of the Paracelsus story is that the Latin name was first given to von Hohenheim by his friends, who were probably screwing with him, as they knew it would go against his iconoclastic bent. He used it (with a glint in his eye) as an occasional pen name, and it eventually stuck.

+

+In this spirit I decided on the Latin slogan. It's a bit of a post-post-modern jab at myself, medical tradition, and old bearded white men, who sometimes knew how to make fun of themselves and occasionally had good ideas despite their bearded whiteness.[^6] Also, Latin is pretty. And regardless of language, the idea this phrase represents is possibly my favorite, in medicine and in life.

+

+### Poison and other gifts

+

+The word for "poison" in German is _Gift_. English "gift," meaning "present," comes from the same Proto-Indo-European root: *ghebh*-, "[to give](https://www.etymonline.com/word/gift)." (Language is, after all, susceptible to [divergent evolution](https://en.wikipedia.org/wiki/Divergent_evolution), sometimes [amusingly](http://www.bbc.co.uk/languages/yoursay/false_friends/german/be_careful__its_a_gift_englishgerman.shtml)). This dual meaning fits nicely with the general conceit --- medicine and poison are two sides of the same coin, gifts in either case, to be used [with judgment, not to excess](https://www.lds.org/scriptures/dc-testament/dc/59.20).

+

+We can keep going down the etymologic rabbit hole: [poison](https://www.etymonline.com/word/poison) comes from Proto-Indo-European _po(i)_, "to drink," the same root that gave us "potion" and "potable." The Latin [_venenum_](https://www.etymonline.com/word/venom) from our quote, which eventually led to English "venom," comes from Proto-Indo-European _wen_, "to desire, strive for," which made its way around to _wenes-no_, "love potion," and then to more general meaning as "drug, medical potion," before finally assuming the modern meaning of a dangerous substance from an animal. I wonder if the evolution of these words from neutral or positive to negative reflects cycles of hope and disillusionment. The history of medicine is filled with well-meaning, careful clinicians who were often simply wrong, as well as charlatans ([medicasters](https://www.etymonline.com/word/medicaster), even).

+

+By contrast, the word "medicine" has undergone remarkably little change in meaning. It comes from Proto-Indo-European [_med_](https://www.etymonline.com/word/*med-), "to take appropriate measures," a root shared with "meditate," "modest," "accomodate," and, of course, "[commode](https://www.etymonline.com/word/commode)." This is a complete way to think about how to practice and receive medicine: to take appropriate measures. The difficult part is deciding what "appropriate" means.

+

+### So what?

+

+I'm going to be a doctor shockingly soon (2020, two years after this writing). After residency I will most likely specialize in either hematology-oncology (blood cancer) or palliative care,[^3] and in either case will help people decide which poison to take in an effort to feel better.

+

+Crazily enough, some of the best treatments for some of the worst diseases are classical poisons, such as arsenic for some leukemias. Arsenic is what the lucky ones get to take --- we have and regularly use other drugs that are much more dangerous. It's not just drugs we poison people with, either. If you are fortunate enough to have the right kind of cancer, enough physical strength left in reserve, and a donor to make you eligible for a bone marrow transplant, get ready to become friends with a [linear particle accelerator](https://en.wikipedia.org/wiki/Linear_particle_accelerator). We radiate the bone marrow until it gives up the ghost, then start over fresh, resurrection from the inside out.

+

+In palliative care it's not so different, even though the goal is symptom management and not cure. An illustrative anecdote: during a palliative care rotation I was trying to help the physician do some calculations she had been doing by hand. I thought, "there has to be an online calculator for this...", and pulled up several medical calculators from respected organizations. In every case, after I put in the drug and dose I wanted, the website would say something like,

+

+>"Hell no. We don't touch that stuff. We're not even going to do the calculation for you. If you need that medicine at that dose, call a palliative care doc. Consider our asses covered."

+

+We dispense derivatives of poppy that make heroin look tame, administer amphetamines as antidepressants, and [we're starting to get good at using LSD](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5867510/). Poisons, all. Medicines, all.

+

+My smartass response when people ask me why I chose medicine is "power, drugs, money, and women." It's not entirely untrue: nobody else is allowed to use these tools on humans, whether fancy knives or fancy x-rays or fancy poisons; I will always have a job and a comfortable living no matter what happens to the economy (my wife and I have had kids since undergrad, which didn't change my career goals but added an extra layer of responsibility); and chicks dig docs in uniform (the coat is white because it's hot).

+

+### To sum up:

+

+In the right hands, with respect and care, even the most dangerous substances can be tools for healing. I love that. So, for now at least, the pretentious phrase stays. Once more, with gusto: _Sola dosis facit venenum._[^7]

+

+[^2]: The article from The Oncologist that gave us the Withering quote made the unfortunate mistake of saying he was a **15th** century physician, which has, even more unfortunately, led to the persistence of the wrong century attached to his name in other publications. He was born in 1741, discovered digitalis in 1775, and died in 1799. <p> </p> Because I'm completely insufferable:

+

+[^1]: Foxglove, the common name for *Digitalis purpura*, has been used in medicine for centuries, most notably for "dropsy," the edema (fluid accumulation) associated with heart failure and other conditions. <p> Withering was the one who figured out extraction methods, dosages, and side effects, and most thoroughly estabilshed it as a medicine rather than a folk remedy or poison, but it didn't come out of nowhere. This is almost always the case: we stand on the shoulders of giants, aspirin comes from bark, etc. </p> <p> While I kind of wish the story that Withering stole it wholecloth from a local herbalist, Mother Hutton, was true, as it would fit my bias that most medicine is built on masculine appropriation of traditionally feminine arts without giving proper credit, this particular story [does not appear to have any historical basis](https://doi.org/10.1016/S0735-1097(85)80457-5). (by the way: amniotomy hooks are sharpened crochet hooks, surgical technique is mostly translated sewing technique, pharmacy is fancy herbalism --- and it's not just in medicine: is a chef a man or a woman? What about a cook? Men make art, women make crafts. Etc., ad nauseum.)

+

+[^3]: [Palliative care](https://getpalliativecare.org/whatis/) is all about helping people feel better when their disease or the treatment for it makes them feel crappy, physically and emotionally. Palliative care providers also know the legal system and end-of-life decision-making inside and out, and much of their work is counseling with worried and blindsided families who are trying to make decisions with and for their critically ill loved one.

+

+[^4]: If we put on our 21st century lenses, we see that he was indeed poisoning his patients, but in good faith rather than out of quackery or malice. Paracelsus himself [probably died from mercury intoxication](http://www.paracelsus.uzh.ch/general/paracelsus_life.html), incurred from years of practicing alchemy, which was a perfectly respectable occupation for the learned of the time.

+

+[^5]: One of the richest records of Paracelsus' thought is _The Basle Lectures_, which is written in Latin, but this is a [collection of class notes](http://www.paracelsus.uzh.ch/general/paracelsus_works.html) taken by his students, who, like all good med students of the time, studied in Latin. The rest of the best work from and on Paracelsus [is in German](http://www.paracelsus.uzh.ch/texts/paracelsus_reading.html), as far as I can tell, with [few exceptions](https://yalebooks.yale.edu/book/9780300139112/paracelsus). This is a source of irritation for this monolingual Anglophone.

+

+[^6]: Ok, Paracelsus wasn't bearded. But he should have been. I mean, look.

+

+[^7]: On the pronunciation: if you want to sound more Italian, then "soh-la dose-ees fa-cheet ven-ay-noom." If you want to have fun in a different way, make the "c" in "facit" a hard "k" and throw in a comma and some emphasis: "soh-la dos-ees, **fa-keet** ven-ay-noom." Go ahead. Try it out loud.

diff --git a/2018-10-20-a-workflow-for-remembering-all-that-science-and-also-everything.md b/2018-10-20-a-workflow-for-remembering-all-that-science-and-also-everything.md

@@ -0,0 +1,107 @@

+---

+layout: post

+title: "Remember all the things"

+categories:

+ - learning

+tags:

+ - spaced repetition

+ - desirable difficulty

+ - education

+ - learning

+ - how-to

+---

+

+# We spend so much time reading

+## and forgetting.

+

+Like most of you, I read a silly number of books, journal articles, blog posts, whatever. I forget the vast majority of what I read, almost immediately. Chances are, so do you. We're humans.

+

+The brain is a conservative organ, ruthlessly efficient. It kills memories left and right unless it has a really good reason not to.

+

+The upper limit of short term memory is 6-8 items, and most of what we learn is dumped within a day.[^12] However, there is no known upper limit on long-term memory. Let that sink in: _no known upper limit_. That is, memory is **_in-finite_**: we don't know how much we can know. We are still exploring the far reaches of long-term memory, and I'm excited. What will be possible after decades of purposeful retention of _all the interesting and useful things_?[^1]

+

+Here is a system cobbled together, with the [help of many friends](https://www.mdedge.com/ccjm/article/110825/practice-management/information-management-clinicians), to convince the brain to have a little mercy.[^10] As of October 2018, I've been doing this for a few months, and it's wonderful. It is [just cumbersome enough](https://en.wikipedia.org/wiki/Desirable_difficulty) to create conditions for learning, but not so much that I find excuses to skip the process.[^13] One difference between this system and what I used to do is the proposed longevity: instead of learning things to pass a class, or do a thing, my goal is to have a coherent way to gain and keep knowledge for life, in all its domains.[^11]

+

+In order to keep this page pretty and focus on process rather than tools, I put specific technologies and commentaries in the clickable footnotes.

+

+### Find the thing:

+- discover [^2]

+- decide (is it worth my time?) [^3]

+

+### Hoard:

+- so it's available forevermore (for citation, review, sharing) [^9]

+

+### Read:

+- annotate (clarify, connect, question) [^4]

+- highlight (_only_ what I might want to _memorize_. Nothing else.) [^5]

+

+### Review:

+- scan annotations and highlights. [^6]

+- decide what to commit to long-term memory. [^7]

+

+### Retain:

+- spaced repetition with digital notecards (key points, actionable facts, so what?, lovely quotes, etc.) [^8]

+

+If you have suggestions for improvement, questions, tools, or experiences, I would love to hear them.

+

+

+[^1]: I have a friend who has kept up on his spaced repetition tools from the first US medical licensing exam (10-15k digital flashcards, all the things you learn in the first two years of medical school), and I positively seethe with envy at how little review he will have to do when he takes the second exam.

+

+ Much, if not _most_ of my preparation time for the second exam was re-learning things I had learned for the first exam and forgotten. My proof of this was that when I missed practice questions, I could usually search my old flashcard deck and find the exact answer. How much better would life be if I could keep a larger proportion of what I learn, especially if I do it with a system that takes minutes per day?

+

+ Of course, not everything needs to be retained, and the medical licensing exams are, unfortunately, riddled with trivia (i.e. things that are highly testable, but of little use clinically, such as the chromosomal location of a very rare genetic disorder: most doctors will never see the disease, and if they do the last thing that will matter is the location on the chromosome).

+

+[^2]: In order, these are my preferred ways to find things:

+ 1. **word of mouth** (in-person, ideally, but a well-crafted newsletter is also a delight. Despite all this technology, I find the best way to address the problem of "[unknown unknowns](https://en.wikipedia.org/wiki/There_are_known_knowns)" is through respected friends and colleagues).

+ 2. **purposeful search** (goal-driven: help this patient, write this paper/blog post, scratch this itch).

+ 3. **automatic digests** ([RSS feeds](https://fraserlab.com/2013/09/28/The-Fraser-Lab-method-of-following-the-scientific-literature/) of keywords, journals, authors, etc.) I find this approach less useful for students, who have usually not yet differentiated into their specific interests, because any feeds they set up will be too noisy. The link above is great for an overview and examples, but their intake funnel is too wide for my taste. For example, they include the general feed from _Nature_ in addition to highly idiosyncratic feeds. _Nature_ has some interesting stuff and is a blast to browse, but my goal with RSS is to be targeted and _save_ time: keep the idiosyncracies and ditch _Nature_, unless you set up a filter for only the types of articles from _Nature_ you actually care about.

+

+[^3]: Quick scan, check out the pictures, skim abstract if available, consider strength of recommendation. Certain people have given me such delicious recommendations in the past that anything they now recommend skips to the head of the reading list.

+

+[^4]: For most web-first content, [Diigo](https://www.diigo.com) is great, and I use [Xodo](https://www.xodo.com/) for PDF markup.

+

+ Xodo is the best free full-featured PDF tool I have found: quick, reliable, support for search, annotation (and signatures), highlighting.

+

+ [SumatraPDF](https://www.sumatrapdfreader.org/free-pdf-reader.html) is the fastest PDF reader I have found, great for pure ctrl-F search especially with huge PDF textbooks, but achieves this by being absolutely bare-bones (no handwritten annotation, highlighting, etc.).

+

+ I also love paper and pen, and because I put the things I want to keep into spaced repetition software, I can usually recycle the paper without fear of losing something important.

+

+[^5]: Highlighting is _not_ a good method to engage with text and make it sink in. This has been studied, [repeatedly](https://eric.ed.gov/?id=EJ1021069). One of the studies cited in the linked review showed that highlighting _impairs_ comprehension.

+

+ However, if the highlight signals something meaningful and consistent, such as only the kind of information you might want to memorize, you can use the visual cue to quickly find, review, and make decisions about your next steps.

+

+ I still do most of my reading on paper, where highlighting makes review easy. In an app like [Diigo](https://www.diigo.com) or Kindle, your highlights are extracted into a separate document, which tightens review even further.

+

+[^6]: Here's where highlighting only the things you might want to memorize shows its utility.

+

+ You may also find that your annotations need annotations, e.g. a question you asked was answered later in the paper.

+

+[^7]: If something seems worth the [5-10 minutes](http://augmentingcognition.com/ltm.html) it will take to make and review a flash card, do it. If not, screw it. Also, develop a low threshold for fixing or deleting cards. This makes card creation in the first place less of a daunting task.

+

+[^8]: If you keep all your digital notecards in a tool like [Anki](http://ankiguide.com/getting-started-with-anki-srs/), you will have the spaced repetition part automated, provided you remember to open the app every day.

+

+ If you keep everything in a single Anki deck, regardless of subject, you will also gain the benefit of [interleaving](https://www.scientificamerican.com/article/the-interleaving-effect-mixing-it-up-boosts-learning/). Interleaving is one of a handful of techniques shown to improve memory and understanding, and basically involves mixing material freely without regard for category.

+

+ For example, instead of homework with all quadratic equations, mix in all the math from the whole semester in random assortment. In medicine, interleaving is particularly important: if a person comes in with chest pain, heart attack is not the only possibility, and if I had studied only cardiology that month I might fail to consider panic attacks, lung problems, digestive issues, or even blunt trauma, and send the person home after a normal EKG without even looking at their chest or asking any questions. Real life is interleaved.

+

+ So I keep my Anki cards all mixed up, such that in a single session I might see bits of useful computer code, friends' birthdays, dosing regimens for drugs, key points of journal articles, beautiful quotes, etc., in rapid succession.

+

+[^9]: [Diigo](https://www.diigo.com) for standard web fare, [Zotero](https://academicguides.waldenu.edu/library/zotero) for journal articles with PDFs.

+

+ We have free, infinitely large Google Drives (for life!) at my university, so I use the [process described here](https://docs.google.com/document/d/1dmdLyZut4rpfPDF8Mt_JhgHLtYkmYS6kggkwn1lSGQQ/edit?usp=sharing) to keep the PDFs available in the cloud. If I use this I can read and markup on my phone, computer, whatever, and it will automatically sync.

+

+[^10]: This did not come out of a vacuum. I started with spaced repetition in undergrad under the guidance of a brilliant tutor, then learned from my own students, and am continually indebted to countless classmates and generous souls online.

+

+ I am deeply grateful to [Harvard Macy Institute](https://www.harvardmacy.org/index.php) and [Dr. Neil Mehta](https://www.linkedin.com/in/neilbmehta/), in particular, for pushing me to formalize my thinking and get beyond banal "cram and regurgitate" methods for exams and classrooms. They were also the first to give me a framework, and provided many of these tools.

+

+ These ideas are not new, and have been presented in many forms: my contribution here is a personal system for putting it all together, with my preferred tools. **The key addition is spaced repetition**, which makes it much more likely for ideas to be imprinted deeply and available quickly.

+

+[^11]: For the philosophic among you: I study artificial intelligence, tools that can consider trillions of variables all at once. The goal is to outsource certain types of thinking that computers are better at, for the benefit of (in my case) people with serious illness.

+

+ This fits with the basic story of technology: outsource a thing to amplify it (I can pick up a rock: a crane can pick up a house; I can run: an airplane can fly; I can remember some things: Google remembers all the things).

+

+ A few technologies, however, are about _insourcing_, that is, developing the human element to its maximum. Spaced repetition is one of these technologies, optimized with proven algorithms to maximize how much we can learn and retain. What will be possible when highly developed human memories couple with human creativity, as well as technological support from AI and other advances? So far the research on AI suggests that the very best systems are not solely computers or solely humans, but hybrids, humans working with machines, emphasizing the strengths of both. What happens when we optimize each component? For good and for bad?

+

+[^12]: The average working memory of primates, including humans, is 4 items. The range is 1-8, ish, depending on the details of the study and participants. See [EK Miller's lab page](http://ekmillerlab.mit.edu/publications/) for links to recent work, especially **Miller, E.K. and Buschman, T.J. (2015) Working memory capacity: Limits on the bandwidth of cognition. Daedalus, Vol. 144, No. 1, Pages 112-122.** A free PDF of this paper is available on the linked website.

+

+[^13]: Free PDF article on desirable difficulty from UCLA available [here](https://bjorklab.psych.ucla.edu/wp-content/uploads/sites/13/2016/04/EBjork_RBjork_2011.pdf).

diff --git a/2018-11-01-Artificial-Intelligence-Definitions-and-Indefinitions.md b/2018-11-01-Artificial-Intelligence-Definitions-and-Indefinitions.md

@@ -0,0 +1,132 @@

+---

+layout: post

+title: "On the buzziest of buzzwords: what is AI, anyway?"

+toc: true

+image: /images/unsplash-grey-flowerbuds.jpg

+categories:

+ - AI for MDs

+tags:

+ - artificial intelligence

+ - machine learning

+ - deep learning

+ - big data

+ - definitions

+---

+

+# Introduction

+

+

+

+Artificial Intelligence is kind of a big deal.[^2] Despite real advances, particularly in medicine, for most clinicians "AI" is at best a shadowy figure, a vaguely defined ethereal mass of bits and bytes that lives in Silicon Valley basements and NYT headlines.

+

+"Bob, the AI is getting hungry."

+

+"I don't know, Jane, just throw some AI on it."

+

+"AI AI AI, I think I'm getting a headache."

+

+This article is the first of a series meant to demystify AI, aimed at MDs and other clinicians but without too much medical jargon. We begin with definitions (and indefinitions), with examples, of a few of the most popular terms in the lay and technical presses.

+

+# Artificial Intelligence

+

+There is no generally accepted definition of AI.

+

+This begins a theme that will run throughout this article, best illustrated with an analogy from Humanities 101.

+

+If you pick up a stack of Western humanities textbooks with chronologies of the arts from prehistory to the present, you will likely find a [fairly unified canon](https://en.wikipedia.org/wiki/Western_canon) (try to find a textbook that does _not_ include Michelangelo and Bach, even if it is brand new and socially aware) up until the 1970s or 1980s . At this point, scholarly consensus wanes. It has not had time to mature. As the present day approaches, the selection of important pieces and figures, and even the acknowledgement and naming of new artistic movements (e.g. "[post-postmodernism](https://en.wikipedia.org/wiki/Post-postmodernism)"), becomes idiosyncratic to the specific set of textbook writers.

+

+While the phrase "AI" has been around since [1955](https://aaai.org/ojs/index.php/aimagazine/article/view/1904), the recent explosion in tools, techniques, and applications has destabilized the term. Everyone uses it in a slightly different way, and opinions vary as to what "counts" as AI. This reality requires a certain mental flexibility, and an acknowledgement that any definition of AI (or any of the other terms discussed below) will be incomplete, biased, and likely to change.

+

+With that in mind, we offer three definitions:

+

+

+## General AI

+{:height="400px"}

+

+This is the kind of AI that can reason about any kind of problem, without the requirement for explicit programming. In other words, general AI can think flexibly and creatively, much in the same way humans can. _General AI has not yet been achieved_. [Predictions](https://hackernoon.com/frontier-ai-how-far-are-we-from-artificial-general-intelligence-really-5b13b1ebcd4e) about when it will be achieved range from the next few decades, to the next few centuries, to never. [Perceptions](https://www.newyorker.com/magazine/2018/05/14/how-frightened-should-we-be-of-ai) of what will happen if it is achieved range from salvific to apocalyptic.

+

+

+## Narrow AI

+{:class="img-responsive"}

+

+This is the kind of AI that can perform well if the problem is well-defined, but isn't good for much else. Most AI breakthroughs in recent years are "narrow," algorithms that can meet or exceed human performance on a specific task. Instead of "thinking, general-purpose wonder-boxes," current AI successes are more akin to "[highly specialised toasters](https://aeon.co/ideas/the-ai-revolution-will-be-led-by-toasters-not-droids)." Because most AI is narrow, and quite so, when clinicians see any article or headline claiming that "AI beats doctors," they would be wise to ask questions proposed by radiologist and AI researcher, [Dr. Oakden-Rayner](https://lukeoakdenrayner.wordpress.com/2016/11/27/do-computers-already-outperform-doctors/): "What, _exactly_, did the algorithm do, and is that a thing that doctors actually do (or even want to do)?" A more comprehensive rubric for evaluating narrow AI and planning projects is available in the appendix to Brynjolfsson and Mitchell's [practical guide to AI](http://science.sciencemag.org/content/358/6370/1530.full) from _Science_ magazine.

+

+

+## AI

+

+

+We will finish with Kevin Kelly's [flexible and aware definition](https://ideas.ted.com/why-we-need-to-create-ais-that-think-in-ways-that-we-cant-even-imagine/):

+>In the past, we would have said only a superintelligent AI could beat a human at Jeopardy! or recognize a billion faces. But once our computers did each of those things, we considered that achievement obviously mechanical and hardly worth the label of true intelligence. We label it "machine learning." Every achievement in AI redefines that success as "not AI."

+

+This view of AI takes into account the continual progression of the field, in sync with the progression of humans that produce and use the technology.

+

+Kelly's quote calls to mind George R.R. Martin's [humorously sobering line](http://www.georgerrmartin.com/about-george/on-writing-essays/on-fantasy-by-george-r-r-martin/),

+ >Fantasy flies on the wings of Icarus, reality on Southwest airlines.

+

+If we flip this quote on its head a bit, we can see that real-life flight is a comprehensible thing, intellectually accessible to any person willing to put in time to learn a little physics and engineering, to the point that it becomes banal. Even for those who know nothing of the math and science, most are unmovably bored during their typical commuter flight, some fast asleep even before the roar of the tarmac gives way to the smooth and steady stream at 30,000 feet.

+

+AI is now, in the minds of many, more akin to Icarus than the 5:15 to Atlanta.

+

+In this series we hope to gain the middle ground: help the reader gain and maintain a sense of possibility and perspective, but also understand the mundane ins-and-outs of day-to-day AI.

+

+# Machine Learning

+

+A common definition of ML goes something like,

+>Given enough examples, an algorithm "learns" the relationship between inputs and outputs, that is, how to get from point A to point B, without being told exactly how points A and B are related.

+

+This is reasonable, but incomplete. Each algorithm has its own [flavor](https://xkcd.com/2048/): assumptions, strengths, weaknesses, uses, and adherents.

+

+The simplest example generalizes well to more complex algorithms:

+

+Imagine an AI agent that is shown point A and point B of multiple cases. If it assumes a linear relationship between input (A) and output (B), which is often a reasonable approach, it can then calculate (“learn”) a line that approximates the trend. After that, all you have to do is give the AI an input, even one it hasn't seen before, and it will tell you the most likely output. This describes the basic ML algorithm known as [Linear Regression](https://www.youtube.com/watch?v=zPG4NjIkCjc).

+

+

+

+While linear regression is powerful and should not be underestimated, it depends on the [core assumption](https://xkcd.com/1725/) we outlined, that is, the data are arranged in something approaching a straight line. From linear and logistic regression through high-end algorithms such as gradient-boosting machines ([GBM](https://www.youtube.com/watch?v=OaTO8_KNcuo)s) and Deep Neural Networks (DNNs), each machine learning algorithm has certain assumptions. A major advantage of many newer algorithms is that their assumptions are far more flexible than the classic regression functions available on high school graphing calculators, but at their core they are still abstracted approximations of the real world, equations defined by humans.

+

+Since the goal of this series is to help the reader try out some machine learning with hands-on coding, we should also note here that in most cases, running a GBM is exactly as easy as running linear regression (LR), if not easier: same number of lines of code, same basic syntax. Most of the time you have to change only one or a few words to switch, for example, from GBM to LR and vice versa. Often, an algorithm such as GBM is actually _easier_ to put into play, because it does not place as many requirements on the type and shape of data it will accept ([roughly 80% ](https://www.forbes.com/sites/gilpress/2016/03/23/data-preparation-most-time-consuming-least-enjoyable-data-science-task-survey-says/) of a data science job is collecting and whipping data into something palatable to the algorithm). Complexities may come later, when fine-tuning, and interpreting and implementing findings, but those problems are by no means intractable. We'll get to that in a later post.

+

+Even "unsupervised" machine learning, wherein the algorithm seeks to find relationships in data rather than being told exactly what these relationships should be, is based on iterations of simple rules. Below is an example of an unsupervised learning algorithm called [DBSCAN](https://dashee87.github.io/data%20science/general/Clustering-with-Scikit-with-GIFs/). DBSCAN is meant to automatically detect groupings, for example, gene expression signatures or areas of interest in a radiographic image. It randomly selects data points, applies a simple rule to see what other points are "close enough," and repeats this over and over to find groups. You have to choose which numbers to use for `epsilon`: how close points have to be to share a group; and `minPts`: the minimum number of points needed to count as a group. The makers of this GIF chose 1 and 4, respectively.

+

+

+

+As you can see here, "unsupervised" machine learning is clever and useful, but not exactly "unsupervised." You have to choose which algorithm to use in the first place, and almost all algorithms have some parameters you have to set yourself, often without knowing exactly which values will be best (would `minPts = 3` have been better here, to catch that bottom right group?). It is not so different from selecting a drug and its dosage in a complex case: sometimes you will have clinical trials to help you make that decision, sometimes you have to go with what worked well in the past, and sometimes it's pure trial-and-error, aided by your clinical acumen and luck.

+

+Lastly, as always, regardless of the elegance of the algorithm, the machine can only take the data we provide. Junk in still equals [junk out](http://www.tylervigen.com/spurious-correlations), even if it goes through an [ultraintelligent washing machine](https://xkcd.com/1838/).

+

+

+## Deep Learning

+

+Deep Learning (DL) is a subset of machine learning, best known for its use in computer vision and language processing. Most DL techniques use the analogy of the human brain. A "neural network" connects discrete "neurons," individual algorithms that each process a simple bit of information and decide whether it is worth passing to the next neuron. Over time the accumulation of simple decisions yields the ability to process huge amounts of complex data.

+

+

+

+For example, the neural network may be able to tell you [whether or not something is a hotdog](https://medium.com/@timanglade/how-hbos-silicon-valley-built-not-hotdog-with-mobile-tensorflow-keras-react-native-ef03260747f3), what you probably meant [when you asked Alexa to "play Prince,"](https://developer.amazon.com/blogs/alexa/post/4e6db03f-6048-4b62-ba4b-6544da9ac440/the-scalable-neural-architecture-behind-alexa-s-ability-to-arbitrate-skills) or whether the retina shows signs of [diabetic retinopathy](https://doi.org/10.1001/jama.2016.17216). These successes in previously intractable problems led researchers and pundits to claim that DL was the breakthrough that would lead to general AI, but, in line with Kevin Kelly's fluid definition cited above, experience has now tempered these claims with [specific concerns and shortcomings](https://arxiv.org/abs/1801.00631).

+

+

+# Big Data

+

+

+

+The best definition of "big data" borders on the tautological:

+>Data are "big" when they require specialized software to process.

+

+In other words, if you can deal with it easily in Microsoft Excel, your database probably is not big enough to qualify. If you need something fancy like Hadoop or NoSQL, you are probably dealing with big data. Put simply, these applications [excel](https://www.brainscape.com/blog/wp-content/uploads/2012/10/Jj5i1Ge.jpg) at breaking massive datasets into smaller chunks that are analyzed across many machines and/or in a step-wise fashion, with the results stitched together along the way or at the end.

+

+There is no hard-and-fast cutoff, no magic number of rows on a spreadsheet or bytes in a file, and no single "big data algorithm." In general, the size of big data is increasing rapidly, especially with such tools as always-on fitness trackers that include a growing number of sensors and can yield [troves of data](https://ouraring.com/how-oura-works/), per person, per day. The major task is to separate the wheat from the chaff, the signal from the noise, and find novel, actionable trends. The [larger the data](https://www.wired.co.uk/article/craig-venter-human-longevity-genome-diseases-ageing), the more the potential: for finding something meaningful; for drowning in so many meaningless bits and bobs.

+

+# Summary

+

+AI and related terms have no completely satisfying or accepted definitions.

+

+They are relatively new and constantly evolving.

+

+Flexibility is required[^1].

+

+Behind all of the technological terms, there are humans with mathematics and computers, creativity and bias, just as there is a human inside the white coat next to the EKG.

+

+[^1]: A linguistic gem from an early AI researcher is here apropos: "Time flies like an arrow. Fruit flies like a banana." There are a [delightful number of ways to interpret](https://en.wikipedia.org/wiki/Time_flies_like_an_arrow;_fruit_flies_like_a_banana#Analysis_of_the_basic_ambiguities) this sentence, especially if you happen to be a computer. How much flexibility is too much? Too little?

+

+ The paper with the original Oettinger quote was frustratingly hard to find, as is often the case with classic papers from the middle of the 1900s. So save you the hassle, [here's a PDF](http://worrydream.com/refs/Scientific%20American,%20September,%201966.pdf). The article starts on p. 166.

+

+[^2]: Lest you think I've lost perspective on what really matters, here's a [comparison of the Google search trends over time for "Artificial Intelligence" and "potato."](https://trends.google.com/trends/explore?date=all&q=%2Fm%2F0mkz,%2Fm%2F05vtc) Happy Thanksgiving.

diff --git a/2019-06-10-python-write-my-paper.md b/2019-06-10-python-write-my-paper.md

@@ -0,0 +1,132 @@

+---

+layout: post

+title: "Python, Write My Paper"

+toc: false

+image: /images/unsplash-grey-flowerbuds.jpg

+categories:

+ - AI for MDs

+tags:

+ - coding

+ - python

+ - fstring

+ - laziness

+---

+

+{:class="img-responsive"}

+

+Computers are good at doing tedious things.[^1]

+

+Many of the early advances in computing were accomplished to help people do tedious things they didn't want to do, like the million tiny equations that make up a calculus problem.[^2] It has also been said, and repeated, and I agree, that one of the three virtues of a good programmer is laziness.[^3]

+

+One of the most tedious parts of my job is writing paragraphs containing the results of lots of math relating to some biomedical research project. To make this way easier, I use a core Python utility called the `f-string`, in addition to some other tools I may write about at a later date.[^4]

+

+## The problem

+

+First, here's an example of the kinds of sentences that are tedious to type out, error prone, and have to be fixed every time something changes on the back end (--> more tedium, more room for errors).

+

+"In the study period there were 1,485,880 hospitalizations for 708,089 unique patients, 439,696 (62%) of whom had only one hospitalization recorded.

+The median number of hospitalizations per patient was 1 (range 1-176, [1.0 , 2.0])."

+

+The first paragraph of a results section of a typical medical paper is chock-full of this stuff. If we find an error in how we calculated any of this, or find that there was a mistake in the database that needs fixing (and this happens woefully often), all of the numbers need replaced. It's a pain.

+How might we automate the writing of this paragraph?[^5]

+

+## The solution

+

+First, we're going to do the math (which we were doing anyway), and assign each math-y bit a unique name. Then we're going to plug in the results of these calculations to our sentences.

+If you're not familiar with Python or Pandas, don't worry - just walk through the names and glance at the stuff after the equals sign, but don't get hung up on it.

+The basic syntax is:

+

+```python

+some_descriptive_name = some_dataset["some_column_in_that_dataset"].some_mathy_bit()

+```

+

+After we generate the numbers we want, we write the sentence, insert the code, and then use some tricks to get the numbers in the format we want.

+

+In most programming languages, "string" means "text, not code or numbers." So an `f-string` is a `formatted-string`, and allows us to insert code into blocks of normal words using an easy, intuitive syntax.

+

+Here's an example:

+

+```python

+name_of_word_block = f"""Some words with some {code} we want Python to evaluate,

+maybe with some extra formatting thrown in for fun,

+such as commas to make long numbers more readable ({long_number:,}),

+or a number of decimal places to round to

+({number_with_stuff_after_the_decimal_but_we_only_want_two_places:.2f},

+or a conversion from a decimal to a percentage and get rid of everything after the '.'

+{some_number_divided_by/some_other_number*100:.0f}%)."""

+```

+

+First, declare the name of the block of words. Then write an `f`, which will tell Python we want it to insert the results of some code into the following string, which we start and end with single or triple quotes (triple quotes let you break strings into multiple lines).

+Add in the code within curly brackets, `{code}`, add some optional formatting after a colon, `{code:formatting_options}`, and prosper.

+

+As you can see from the last clause, you can do additional math or any operation you want within the `{code}` block. I typically like to do the math outside of the strings to keep them cleaner looking, but for simple stuff it can be nice to just throw the math in the f-string itself.

+

+Here's the actual code I used to make those first two sentences from earlier. First the example again, then the math, then the f-strings.[^7]

+

+"In the study period there were 1,485,880 hospitalizations for 708,089 unique patients, 439,696 (62%) of whom had only one hospitalization recorded.

+The median number of hospitalizations per patient was 1 (range 1-176, [1.0 , 2.0])."

+

+```python

+n_encs = data["encounterid"].nunique()

+n_pts = data["patientid"].nunique()

+

+pts_one_encounter = df[df["encounternum"] == 1].nunique()

+min_enc_per_pt = df["encounternum"].min()

+q1_enc_per_pt = df["encounternum"].quantile(0.25)

+median_enc_per_pt = df["encounternum"].median()

+q3_enc_per_pt = df["encounternum"].quantile(0.75)

+max_enc_per_pt = df["encounternum"].max()

+

+sentence01 = f"In the study period there were {n_encs:,} hospitalizations for {n_pts:,} unique patients, {pts_one_encounter:,} ({pts_one_encounter/n_pts*100:.0f}%) of whom had only one hospitalization recorded. "

+sentence02 = f"The median number of hospitalizations per patient was {median_enc_per_pt:.0f} (range {min_enc_per_pt:.0f}-{max_enc_per_pt:.0f}, [{q1_enc_per_pt} , {q3_enc_per_pt}]). "

+```

+

+If you want to get real ~~lazy~~ ~~fancy~~ lazy, you can combine these sentences into a paragraph, save that paragraph to a text file, and then automatically include this text file in your final document.

+

+```python

+paragraph01 = sentence01 + sentence02

+results_text_file = "results_paragraphs.txt"

+with open(results_text_file, "w") as text_file:

+ print(paragraph01, file=text_file)

+```

+

+To automatically include the text file in your document, you'll have to figure out some tool appropriate to your writing environment. I think there's a way to source text files in Microsoft Word, though I'm less familiar with Word than other document preparation tools such as LaTeX. If you know how to do it in Word, let me know (or I'll look into it and update this post).

+

+Here's how to do it in LaTeX. Just put `\input` and the path to your text file at the appropriate place in your document:[^6]

+

+```latex

+\input{"results_paragraphs_latex.txt"}

+```

+

+With this workflow, I can run the entire analysis, have all the mathy bits translated into paragraphs that include the right numbers, and have those paragraphs inserted into my text in the right spots.

+

+I should note that there are other ways to do this. There are ways of weaving actual Python and R code into LaTeX documents, and RMarkdown is a cool way of using the simple syntax of Markdown with input from R. I like the modular approach outlined here, as it lets me just tag on a bit to the end of the Python code I was writing anyway, and integrate it into the LaTeX I was writing anyway. I plan to use this approach for the foreseeable future, but if you have strong arguments for why I should switch to another method, I would love to hear it, especially if it might better suit my laziness.

+

+Addendum: As I was writing this, I found a similar treatment of the same subject. It's great, with examples in R and Python. [Check it out](https://jabranham.com/blog/2018/05/reporting-statistics-in-latex/).

+

+[^1]: _Automate the Boring Stuff_ by Al Sweigart is a great introduction to programming in general, and is available for free as a [hypertext book](https://automatetheboringstuff.com/). It teaches exactly what its name denotes, in an interactive and easy-to-understand combination of code and explanation.

+

+[^2]: I'm revisiting [Walter Isaacson's _The Innovators"](https://en.wikipedia.org/wiki/The_Innovators_(book)), which I first listened to before I got deeply into programming, and on this go-through I am vibing much harder with the repeated (and repeated) (and again repeated) impetus for building the first and subsequent computing machines: tedious things are tedious.

+

+[^3]: The other two are impatience and hubris. Here is one of the [most lovely websites on the internet](http://threevirtues.com/)

+

+[^4]: For example, TableOne, which makes the (_incredibly_ tedious) task of making that classic first table in any biomedical research paper _so much easier_. Here's a link to [TableOne's project page](https://github.com/tompollard/tableone), which also includes links out to examples and their academic paper on the software.

+

+[^5]: Assign it to a resident, of course.

+

+[^6]: You may have noticed that the name of this file is "results_paragraphs_latex.txt" rather than "results_paragraphs.txt," and that's because LaTeX needs a little special treatment if you're going to use the percentage symbol. LaTeX uses the percentage symbol as a comment sign, meaning that anything after the symbol is ignored and left out of the document. You have to "escape" the percentage symbol with a slash, like this: `\%`. I have this simple bit of code that converts the normal text file into a LaTeX-friendly version:

+

+ ```python

+ # make a LaTeX-friendly version (escape the % symbols with \)

+ # Read in the file

+ with open(results_text_file, "r") as file:

+ filedata = file.read()

+ # Replace the target string

+ filedata = filedata.replace("%", "\%")

+ # Write the file

+ text_file_latex = "results_paragraphs_latex.txt"

+ with open(text_file_latex, "w") as file:

+ file.write(filedata)

+ ```

+

+[^7]: You may have noticed there are two datasets I'm pulling from for this, "data," which includes everything on the basis of _hospitalizations_, and "df," short for "dataframe," which is a subset of "data" that only includes each _patient_ once (rather than a new entry for every hospitalization), along with a few other alterations that allow me to do patient-wise calculations.

diff --git a/2019-10-20-chrome-extensions.md b/2019-10-20-chrome-extensions.md

@@ -0,0 +1,142 @@

+---

+layout: post

+title: "Chrome Extensions I've Known and Loved"

+toc: true

+image: https://source.unsplash.com/xNVPuHanjkM

+categories:

+ - general tech

+tags:

+ - browsers

+ - convenience

+---

+

+A friend recently asked which Chrome extensions I use.

+I've gone through many, and will yet go through many more.

+Here are some I find useful, and some I've found useful in the past.

+

+## Extensions I Have Installed Right Now

+

+### [Vimium](https://chrome.google.com/webstore/detail/vimium/dbepggeogbaibhgnhhndojpepiihcmeb?hl=en)

+

+Navigating a modern web browser is usually done with a mouse or a finger/stylus.

+I like to use the keyboard as much as possible.

+

+Most of my browsing is for a particular piece of information I need for a patient, research project, etc.,

+and I'm going to jump from a text editor to a website and back again very quickly.

+If I can do all of that without leaving the keyboard, I feel much cooler (there may or may not be productivity bonuses).

+

+Vimium uses standard keyboard commands from the text editor Vim for navigating Chrome.

+It works on most pages.

+Vim commands take a little getting used to,

+but once you get the hang of them you'll miss them

+whenever you are deprived.

+

+Some examples:

+- `hjkl` for left, up, down, right (respectively). Seems weird at first, but try it and you'll love it in about 8 minutes. (You can also try some of it on your Gmail now - it has `jk` built in for up and down).

+- `/` for search (one less key press than `ctrl-F`!

+- `f` searches for all links on a page, and gives them a one or two letter shortcut. You type that shortcut, and it activates the link (hold shift while you type the shortcut to open it in a new tab).

+- `x` closes a tab.

+- `gt` Goes to the next Tab. `gT` Goes to the previous tab (think of this as `g-shift-t` and it's intuitive).

+

+### [ColorPick Eyedropper](https://chrome.google.com/webstore/detail/colorpick-eyedropper/ohcpnigalekghcmgcdcenkpelffpdolg?hl=en)

+

+The ability to grab an exact color from a webpage (or PDF) is very useful.

+Maybe you are preparing a presentation and would like to/are forced to use a particular color palette from your institution, or the institution you will be presenting at.

+Maybe you are making figures for an article submission and want to match styling.

+Maybe you would like to color code a document based on an image from an article.

+Whatever it is, it's nice to exactly match a color.

+

+ColorPick Eyedropper is an extension that gives you a crosshair you can place on anything open in Chrome (not just webpages)

+that will give you the code for that color in whichever format you need

+(hex code, RGB, etc.).

+

+### [Loom](https://www.loom.com/)

+

+Loom is free video recording software that can run in Chrome or on a desktop app.

+It's great for simple screencasts, which is all most of us need to get some idea across.

+One of my favorite features is that, if I can run Chrome, I can run Loom - I've recorded little screencasts on locked-down corporate computers.

+

+When I'm thinking about democratized tech,

+I'm thinking about resource-poor constraints

+(e.g. availability of machines, period)

+as well as resource-rich constraints

+(e.g. locked-down machines at your institution,

+that do not let you install or configure much at all).

+Loom is an example of technology that can help in both situations,

+as long as you have a machine modern enough to run Chrome,

+and your admin have at least allowed Chrome on the machine.

+

+

+### [Poll Everywhere for Google Slides](https://www.polleverywhere.com/app/google-slides/chrome)

+

+PollEv is awesome for audience participation in Google Slide presentations.

+You have to have the extension installed for it to work.

+I'm currently giving a lot of presentations, so I have it enabled

+(and will for the foreseeable future).

+

+### Zotero Connector (and Mendeley Importer)

+

+Mendeley used to be my go-to reference manager.

+I moved to Zotero for a variety of reasons

+(mostly: it's free, open-source, and therefore quite extensible.

+Mendeley, for example, could not approve a Sci-Hub integration.

+If you don't know what Sci-Hub is, Google "zotero sci-hub" and let me know if you are inspired or enraged, or both.).

+

+The Zotero extension both allows you to save references to your Zotero library,

+and to use Zotero plugins for reference management in Google Docs.

+

+### Chrome Remote Desktop

+

+Remote desktops are cool. Chrome's built-in solution is very serviceable.

+

+## Extensions I've Known and Loved

+

+### KeyRocket for Gmail

+

+KeyRocket is a suite of tools that help you learn keyboard shortcuts to navigate a variety of software.

+This is a Gmail extension that does just that.

+Whenever you do a thing manually (i.e. by clicking around),

+KeyRocket will put up a brief, inobtrusive popup

+(that you don't have to click on to exit)

+showing you how you could have done that with a keyboard shortcut.

+

+When you stop getting so many popups, you have become proficient with the shortcuts. At some point, you deactivate the extension and bid it a fond adieu.

+

+### [Librarian for arXiv](https://blogs.cornell.edu/arxiv/2017/09/28/arxiv-developer-spotlight-librarian-from-fermats-library/)

+

+arXiv.org is a great resource for finding and disseminating

+research, particularly in computational sciences and related areas.

+Librarian is an extension that makes getting the citations and references very easy.

+

+### [Unsplash Instant](https://chrome.google.com/webstore/detail/unsplash-instant/pejkokffkapolfffcgbmdmhdelanoaih?hl=en)

+

+This replaces your new tab screen with a randomly chosen image from Unsplash.

+Unsplash.com is a wonderful resource for freely usable, gorgeous images.

+I give a lot of presentations, so I use Unsplash for finding pretty backgrounds and illustrative images.

+It is nice to have a pretty new tab screen, and it's a nice way to find images to archive for future use (you can just click the heart icon, or manually download).

+

+P.S. Most of the splash images on my website are from Unsplash (e.g. the one at the left of this post if you're on a large screen, or at the top if you're on a small screen).

+

+I might just reactivate this extension. I'm not sure why I deactivated it.

+

+### [Markdown Viewer](https://chrome.google.com/webstore/detail/markdown-viewer/ckkdlimhmcjmikdlpkmbgfkaikojcbjk?hl=en)

+

+I think it is clear that I love Markdown.

+Markdown Viewer is an extension that allows you to preview

+what Markdown will look like when it's rendered,

+either from a local file or from a website.

+

+Now that I'm writing more Markdown, I might also turn this one back on.

+

+P.S. I turned it back on. It's awesome.

+

+### Others...

+

+These are just the ones I had disabled, but not uninstalled.

+I know I've used others, and I'm sure many of them were cool.

+My laziness at the moment is such that I'm not going to go digging around.

+

+## What about you?

+

+Do you have extensions you know and love, or have known and loved?

+Let me know, and I'll check them out.

diff --git a/2019-10-20-r-markdown-python-friends.md b/2019-10-20-r-markdown-python-friends.md

@@ -0,0 +1,86 @@

+---

+layout: post

+title: "R Markdown, Python, and friends: write my paper"

+toc: true

+image: https://source.unsplash.com/OfMq2hIbWMQ

+categories:

+ - AI for MDs

+tags:

+ - coding

+ - software

+ - laziness

+ - "R Markdown"

+ - Python

+ - Markdown

+ - "academic writing"

+---

+

+## R Markdown is my spirit animal

+

+In a [previous post]({% post_url 2019-06-10-python-write-my-paper %}) I talked about how easy it is, if you're already doing your own stats anyway in some research project, to have a Python script output paragraphs with all the stats written out and updated for you to add into your paper.

+

+The main problem with the approach I outlined was how to get those nicely updated paragraphs into the document you are sharing with colleagues.

+

+Medicine, in particular, seems wed to Microsoft Word documents for manuscripts. Word does not have a great way to include text from arbitrary files, forcing the physician-scientist to manually copy and paste those beautifully automated paragraphs. As I struggled with this, I thought (here cue Raymond Hettinger), "There must be a better way."

+

+Turns out that better way exists, and it is R Markdown.

+

+Though I was at first resistant to learning about R Markdown, mostly because I am proficient in Python and thought the opportunity cost for learning R at this point would be too high, as soon as I saw it demoed I changed my tune. Here's why.

+

+## Writing text

+- R Markdown is mostly markdown.

+ - Markdown is by far the easiest way to write plaintext documents, especially if you want to apply formatting later on without worrying about the specifics while you're writing (e.g. `#` just specifies a header - you can decide how you want the headers to look later, and that styling will automatically be applied).

+ - Plaintext is beautiful. It costs nearly nothing in terms of raw storage, and is easy to keep within a version control system. Markdown plaintext is human-readable whether or not the styling has been applied. Your ideas will never be hidden in a proprietary format that requires special software to read.

+ - I had been transitioning to writing in Markdown anyway, so +1 for R Markdown.

+- R Markdown is also a little LaTeX.

+ - LaTeX is [gorgeous](https://tex.stackexchange.com/questions/1319/showcase-of-beautiful-typography-done-in-tex-friends) and wonderful, the most flexible and expressive of all the typesetting tools (though not as fast as our old friend Groff...). It also has a steeper learning curve than Markdown, and is not so pretty on the screen in its raw form. R Markdown lets you do the bulk of your work in simple Markdown, then seamlessly invoke LaTeX when you need something a little fancier.

+- R Markdown is also a little HTML.

+ - HTML is also expressive, and can be gorgeous and wonderful. It is a pain to write. As with LaTeX, you can simply drop in some HTML where you need it, and R Markdown will deal with it as necessary.

+- R Markdown is academic-friendly.

+ - Citations and formatting guidelines for different journals are the tedious banes of any academic's existence. R Markdown has robust support for adding in citations that will be properly formatted in any desired style, just by changing a tag at the top of the document. Got a rejection from Journal 1 and want to submit to Journal 2, which has a completely different set of citation styles and manuscript formatting? NBD.

+

+## Writing code

+R Markdown, as the name implies, can also run R code.

+Any analysis you can dream of in R can be included in your document, and you can choose whether you want to show the code and its output, the output alone, or the code alone.

+People will think you went through all the work of making that figure, editing it in PowerPoint, screenshotting it to a .png, then dropping that .png file into your manuscript, but the truth is...

+you scripted all of that, so the manuscript itself made the .png and included it where it needed to go.

+

+R Markdown is by no means restricted to R code.

+This is the killer app that won me over.

+Simply by specifying that a given code block is Python,

+and installing a little tool (`reticulate`) that allows R to interface with Python,

+I can run arbitrary Python code within the document and capture the output however I want.

+That results paragraph? Sure.

+Fancy images of predictions from my machine learning model? But of course.

+

+If you don't want to use any R code ever, that's fine. R Markdown doesn't mind.

+Use SAS, MATLAB (via Octave), heck, even bash scripts - the range of language support is fantastic.

+

+## Working with friends

+R Markdown can be compiled to pretty much any format you can dream of.

+My current setup simultaneously puts out an HTML document (that can be opened in any web browser), a PDF (because I love PDFs), and (AND!) a .docx Word file,

+all beautifully formatted, on demand, whenever I hit my keyboard shortcut. I can preview the PDF or HTML as I write, have a .docx to send to my PI, and life is good.

+

+Also, because you can write in any programming language, you can easily collaborate between researchers that are comfortable in different paradigms.

+You can pass data back and forth between your chosen languages (for me, R and Python),

+either directly or by saving intermediate data to a format that both languages can read.

+

+## Automating tasks

+Many analyses and their manuscripts, especially if they use similar techniques (e.g. survival modeling), are rather formulaic.

+Many researchers have scripts they keep around and tweak for new analyses revolving around the same basic subject matter or approach.

+With R Markdown, your entire manuscript becomes a runnable program, further automating the boring parts of getting research out into the open.

+

+One of the [first introductions](https://www.youtube.com/watch?v=MIlzQpXlJNk) I had to R Markdown shared the remarkable idea of setting the file to run on a regular basis,

+generating a report based on any updated data,

+and then sending this report to all the interested parties automatically.

+While much academic work could not be so fully automated, parts of it certainly can be.

+

+Perhaps your team is building a database for outcomes in a given disease, and has specified the analysis in great detail beforehand.

+One of my mentors gives the advice that in any project proposal you should go as far as to mock up the results section,

+including all figures,

+so you make sure you are collecting the right data.

+If this was done in an R Markdown document rather than a simple Word document,

+you could have large parts of the template manuscript

+become the real manuscript as the database fleshes out over time.